Mark IIb and breaking the 20 Gflops barrier

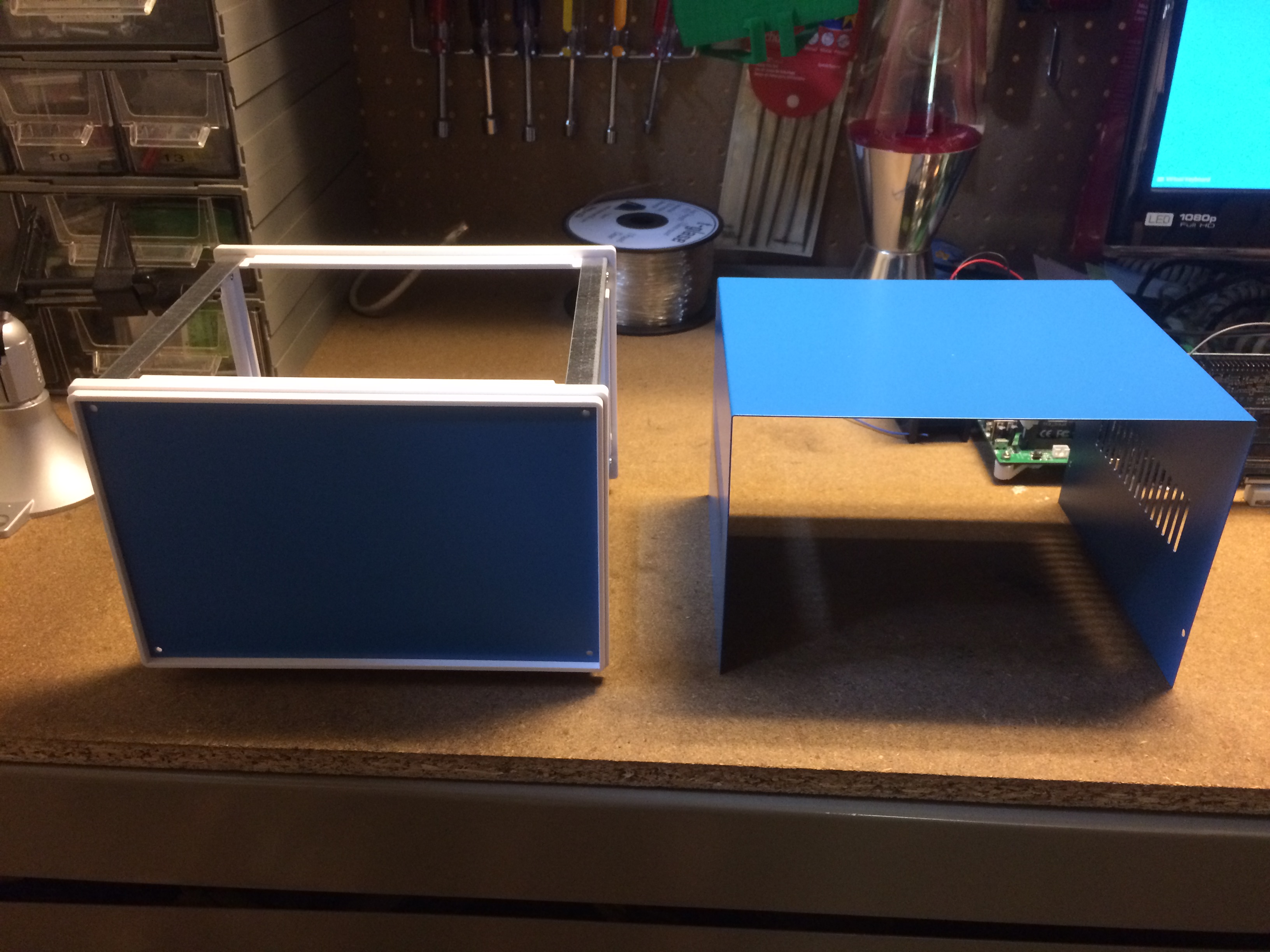

Now that Mark II has been operational more regularly, I’ve noticed that working on it in the current case is really inconvenient. Something with a removable top would make a lot more sense (which is why machines of a similar design had this feature), but when I started designing Mark II I couldn’t find anything like that which would work with the original full-size PINE A64-based design. After switching to the Clusterboard, I took another look and found a case with a removable top that might work. I over-analyzed the specs, then finally broke down and just ordered one to measure the fit directly.

The new case is considerably smaller in the “horizontal” direction and larger in the “vertical” one (I put these in quotes because the way length/width/height are measured makes the comparison difficult). The Clusterboard and one A64 do fit inside this new case, but it’s a pretty tight fit.

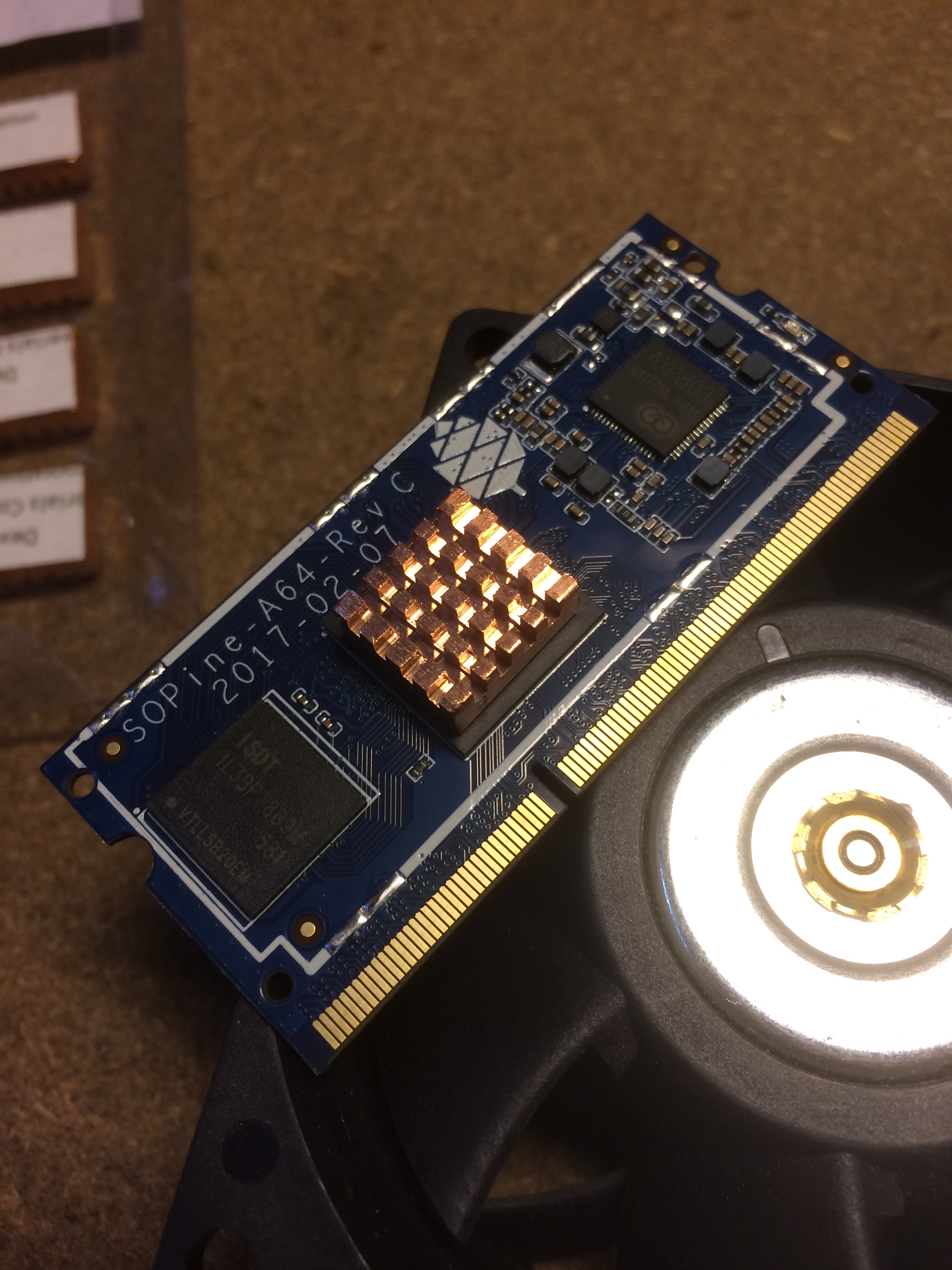

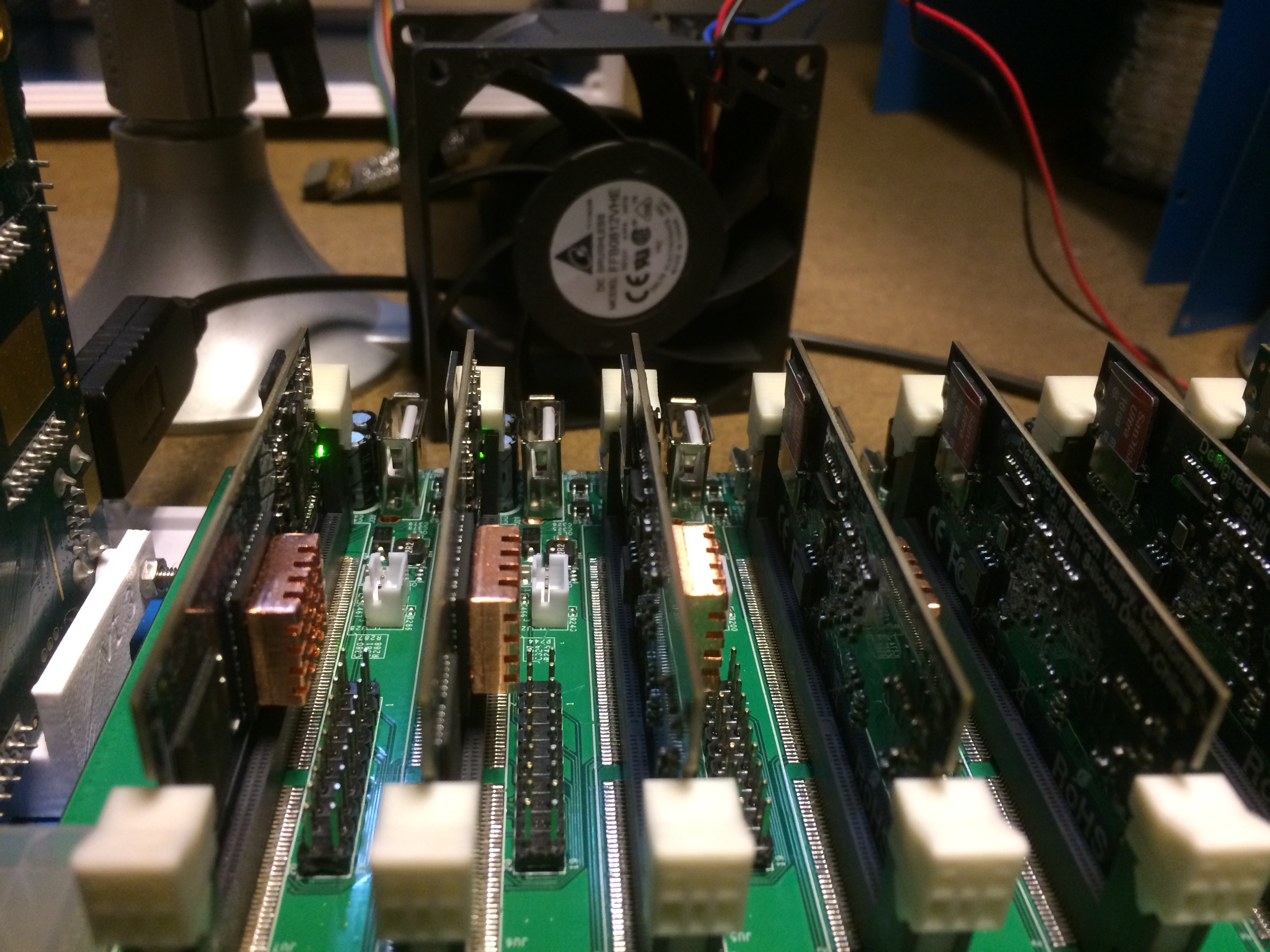

I took this downtime as an opportunity to try a few other modifications I’d been putting off while the machine was working. The first was to try a different Linux build to test my theories about the performance limitations found previously. I’ve made a few attempts at “swapping out” the kernel of the Armbian images I’ve been using on the cluster nodes, but the only result was “bricking” a couple of them. After that I decided it was worth trying the “stock” Ubuntu build that PINE64 provides for the SOPINE module just to see what happens. I thought I had read that this would be a non-starter due to issues with the network interface and the Clusterboard, but figured it was worth a shot. As it turned out, the image worked fine. I was able to boot up a single node using the new image, configure it for the cluster and run HPL on it with no obvious problems. I was, however, able to see that thermal management was working (unlike in the previous build) and that it was throttling down the CPU to keep overheating at bay. This gave me a reason to try some active thermal management. I’d picked up some fat little copper heatsinks for the SOPINE modules a while back, but since the previous Linux build didn’t produce any temperature feedback it wasn’t clear that they would make any difference. Now that I can see the CPU getting throttled due to overheating, it makes sense to give these a try.
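For anyone who wants to watch for the same throttling behavior, here is a minimal Python sketch that reads the temperature and clock speed a Linux kernel typically exposes through sysfs. It is not the tooling used on this cluster. The specific paths (`thermal_zone0`, `cpu0`) are assumptions that vary by kernel and board, and the sketch assumes the temperature is reported in millidegrees Celsius, which some vendor kernels don't follow.

```python
from pathlib import Path

# Assumed sysfs locations; zone and CPU numbering differ between kernels/boards.
TEMP_PATH = Path("/sys/class/thermal/thermal_zone0/temp")
CUR_FREQ_PATH = Path("/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq")
MAX_FREQ_PATH = Path("/sys/devices/system/cpu/cpu0/cpufreq/cpuinfo_max_freq")


def read_int(path):
    """Return the integer stored in a sysfs-style file, or None if unreadable."""
    try:
        return int(Path(path).read_text().strip())
    except (OSError, ValueError):
        return None


def snapshot(temp_path=TEMP_PATH, cur_path=CUR_FREQ_PATH, max_path=MAX_FREQ_PATH):
    """Collect temperature (deg C) and clock speeds (MHz) for one core."""
    temp = read_int(temp_path)   # assumed to be millidegrees Celsius
    cur = read_int(cur_path)     # cpufreq values are reported in kHz
    top = read_int(max_path)
    return {
        "temp_c": None if temp is None else temp / 1000.0,
        "cur_mhz": None if cur is None else cur / 1000.0,
        "max_mhz": None if top is None else top / 1000.0,
        # True when the core is currently clocked below its hardware maximum.
        "below_max": cur is not None and top is not None and cur < top,
    }


if __name__ == "__main__":
    print(snapshot())
```

A core running below its maximum isn't proof of thermal throttling on its own, since the frequency governor also lowers the clock when a node is idle. During a sustained HPL run, though, a high temperature combined with a reduced clock is a reasonable hint.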

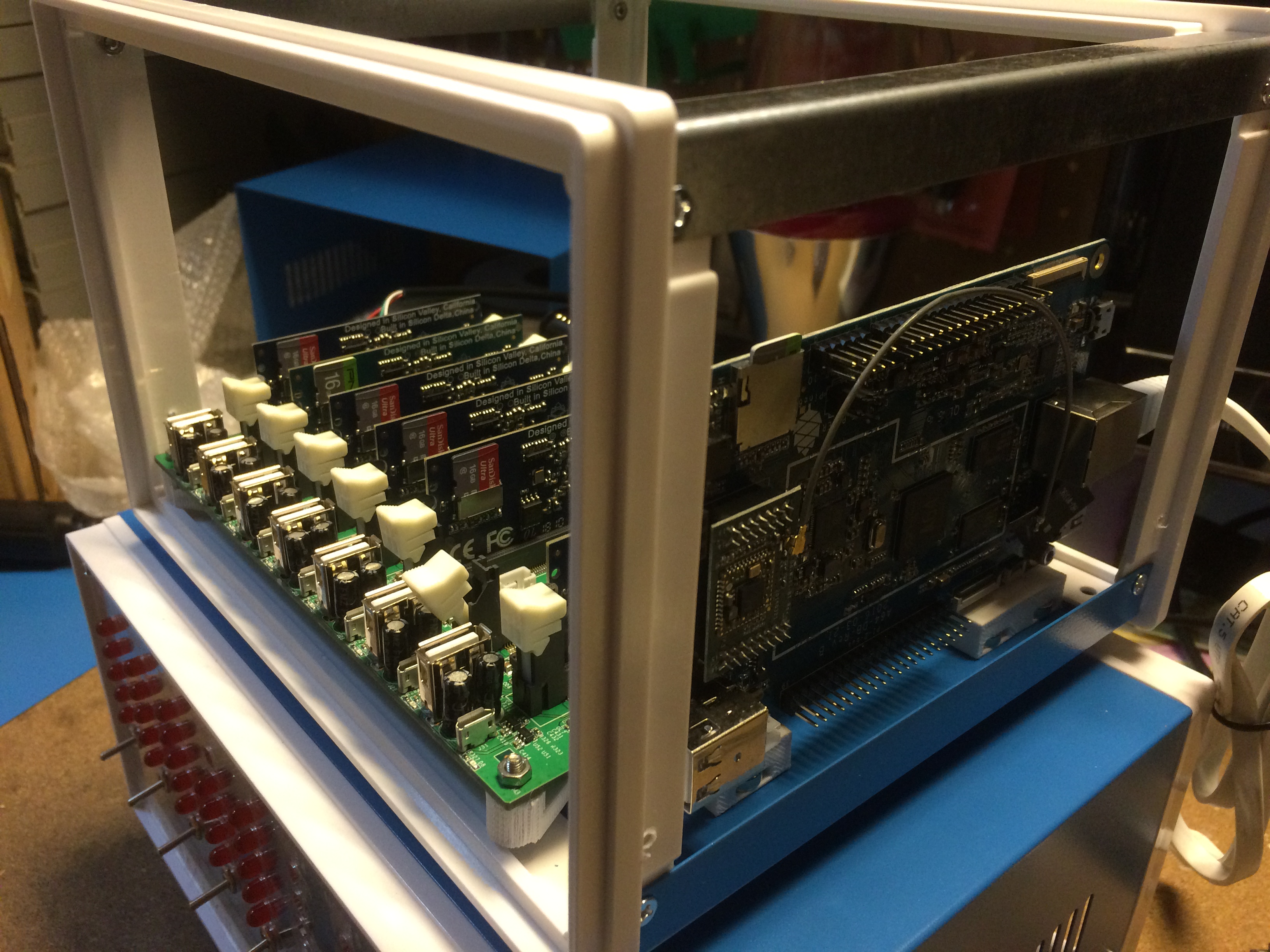

Using the heatsinks alone didn’t reduce the incidence of throttling much, so I coupled them with a (very janky) fan setup and tested again. This made a big difference, and in fact completely eliminated the CPU throttling messages from the logs.

This looked pretty good, so I pulled the rest of the cluster nodes, re-imaged their SD cards and installed heatsinks. The only problem I ran into bringing these online was the hard-coded MAC address in the O/S image, which resulted in only one of the nodes being accessible via the network. Once I identified the problem it was a straightforward fix to make each node regenerate its MAC address, and they all came up correctly. Now that I had a recipe for setting up the cluster nodes to run HPL, doing so went pretty smoothly and I was able to run a full-power test in short order. The results speak for themselves: 22.12 Gflops. This is still a ways from my target of 50 Gflops, but overcoming the previous ceiling is encouraging, especially because I was able to correctly identify the cause of the limitation. Next steps will be to spend some time tuning the contents of this new O/S image as well as tuning the overall configuration now that the cluster nodes can run at full clock speed (or perhaps beyond…?). I’m also very happy with how the new case is working out, and I’m planning on redesigning the front panel to fit it. This design will be called “Mark IIb”, and I have a number of changes in mind beyond simply resizing the panel, but more on that later…
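To illustrate the MAC address problem: when every node boots from an image with the same hard-coded address, the network can't tell the nodes apart. The post doesn't record the exact fix, so the following is only a sketch of one common approach, which is to generate a random "locally administered" unicast address for each node. The function name and node names are hypothetical, and how the address is applied depends on the O/S image.

```python
import random


def random_local_mac(rng=random):
    """Return a random unicast, locally administered MAC address string."""
    first = rng.randrange(256)
    # Set the "locally administered" bit (0x02) and clear the "multicast" bit (0x01).
    first = (first | 0x02) & 0xFE
    rest = [rng.randrange(256) for _ in range(5)]
    return ":".join(f"{octet:02x}" for octet in [first] + rest)


if __name__ == "__main__":
    # Hypothetical node names, purely for illustration.
    for node in ["node1", "node2", "node3"]:
        print(node, random_local_mac())
```

Setting the locally administered bit keeps generated addresses out of the range assigned to hardware vendors, which is why it is the usual choice for made-up addresses like these.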

Comments

TheSec: Isn’t the 22 Gflops the Rpeak according to your own post? Or was the 22 Gflops Rpeak based on 408 MHz?

Jason J. Gullickson: That’s correct, the 22 Gflops Rpeak was calculated based on the 408 MHz clock speed limit set by the previous build. Now that the system can reliably run at 1.15 GHz, the Rpeak should be 64 Gflops. If I can achieve about 80% efficiency (which I think is reasonable) the Rmax should be about 50 Gflops, so this is my target for this configuration.
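The arithmetic behind these figures can be checked with a short Python sketch. It uses only numbers quoted in the post and comments (64 Gflops theoretical at 1.15 GHz, 22.12 Gflops measured, 80% target efficiency). In the usual HPL usage, Rpeak is the theoretical figure and Rmax the measured one; the variable and function names below are mine.

```python
THEORETICAL_PEAK_GFLOPS = 64.0   # theoretical peak at 1.15 GHz, from the comment
MEASURED_GFLOPS = 22.12          # HPL result reported in the post
TARGET_EFFICIENCY = 0.80         # efficiency considered reasonable in the comment


def efficiency(measured, theoretical):
    """Fraction of the theoretical peak actually achieved."""
    return measured / theoretical


def target_gflops(theoretical, eff):
    """Measured performance expected at a given efficiency."""
    return theoretical * eff


if __name__ == "__main__":
    eff = efficiency(MEASURED_GFLOPS, THEORETICAL_PEAK_GFLOPS)
    goal = target_gflops(THEORETICAL_PEAK_GFLOPS, TARGET_EFFICIENCY)
    print(f"current efficiency: {eff:.1%}")
    print(f"target at 80%: {goal:.1f} Gflops")
```

By these numbers the 22.12 Gflops run sits at roughly 35% of the new theoretical peak, and 80% of 64 Gflops works out to about 51 Gflops, in line with the 50 Gflops target.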